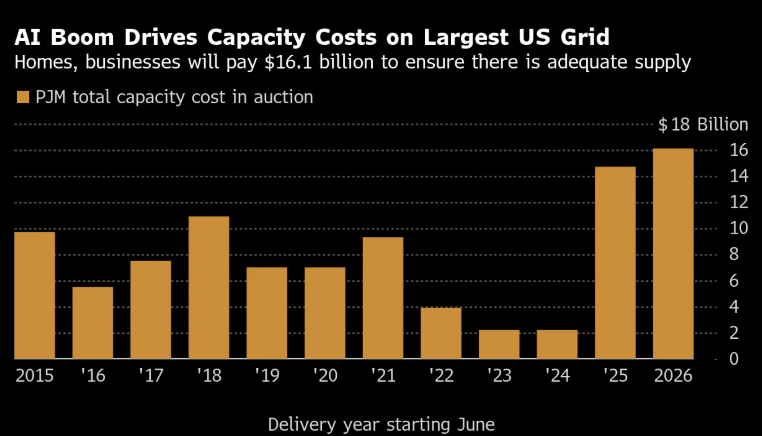

I’ve been watching the tech headlines with a mixture of awe and growing concern lately. The numbers being thrown around for AI infrastructure investments are staggering, but I can’t shake the feeling that we’re building a house of cards on a foundation that wasn’t designed to support it.

The Investment Tsunami

Let me put the recent announcements into perspective. We’re not talking about incremental growth here – we’re witnessing what might be the largest infrastructure buildout in modern history:

| Company/Partnership | Investment Amount | Focus Area |
|---|---|---|
| Nvidia + OpenAI | $100 billion | AI data centers with Nvidia chips |
| SoftBank + Oracle | $500 billion | Cloud infrastructure and data centers |
| Amazon, Google, Meta, Microsoft | $325 billion | Combined data center expansion |
| Total | $925 billion | AI and cloud infrastructure |

That’s nearly a trillion dollars flowing into data center construction. To put that in context, that’s more than the GDP of most countries, and it’s all happening within the next few years.

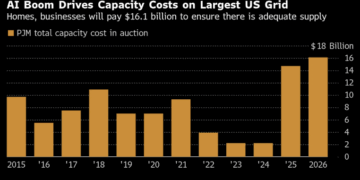

Why Data Centers Are Energy Monsters

Here’s what keeps me up at night: every one of these data centers is, at heart, a machine for consuming electricity on a massive scale. Unlike many traditional computing workloads, AI training can keep thousands of GPUs running near full capacity around the clock. A single high-end AI training facility can consume as much power as a small city.

Consider this: a typical large data center draws about 50-100 megawatts of power. The new AI-focused facilities? They’re looking at 300-500 megawatts each. Assuming an average household draws about 1 kilowatt, that’s enough electricity to power roughly 300,000 to 500,000 homes continuously.
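The homes comparison rests on an assumed average household draw of about 1 kW, which is what the 300,000-500,000 range implies. A minimal Python sketch (the `homes_powered` helper and its `avg_home_kw` parameter are hypothetical names, and the 1.2 kW alternative is purely illustrative) shows how sensitive the headline number is to that assumption:

```python
def homes_powered(facility_mw: float, avg_home_kw: float = 1.0) -> int:
    """Homes whose combined average draw matches a facility's continuous load.

    avg_home_kw is an assumed average household draw, not a measured figure.
    """
    facility_kw = facility_mw * 1_000  # 1 megawatt = 1,000 kilowatts
    return round(facility_kw / avg_home_kw)


if __name__ == "__main__":
    for mw in (300, 500):
        # Compare the 1 kW assumption with a heavier, illustrative 1.2 kW draw.
        print(mw, "MW ->", homes_powered(mw), "homes at 1.0 kW,",
              homes_powered(mw, avg_home_kw=1.2), "homes at 1.2 kW")
```

If the average household draw is higher than 1 kW, the same facility equals fewer homes, so the comparison is best read as a rough order of magnitude.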

“The energy requirements for AI are not just growing – they’re exploding. We’re looking at a 10x increase in power density compared to traditional data centers.” – Industry analysis from various energy sector reports

Our Grid’s Current Reality Check

Meanwhile, our electrical grid is already showing strain. Every summer, we hear warnings about rolling blackouts when air conditioners kick into high gear. The push toward electric vehicles is adding another layer of demand that our infrastructure wasn’t originally designed to handle.

The numbers tell a sobering story:

- Summer peak demand: Many regions operate at 90%+ capacity during heat waves

- EV adoption: Each EV adds roughly 4,000 kWh annually to household consumption

- Grid age: Much of our electrical infrastructure was built decades ago for a different world

The Math Doesn’t Add Up

Let me walk through some rough calculations that have been bothering me:

If just half of that $925 billion investment translates into new data centers, that’s about $462 billion; at roughly $900 million per facility, we’re looking at potentially 500+ new facilities. If each one requires 400 megawatts on average, 500 facilities add up to 200,000 megawatts of additional electrical demand, equivalent to about 200 large, gigawatt-scale power plants running continuously.

For context, the entire US electrical grid currently has about 1,200,000 megawatts of capacity. We’re talking about adding roughly 15-20% more demand to a system that’s already stretched thin.
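The back-of-the-envelope math above can be reproduced in a few lines of Python. This is a sketch under the post’s own numbers, with two inputs inferred rather than stated: about $925 million per facility (what it takes for half of $925 billion to come out to 500 facilities) and about 1,000 megawatts per "large power plant" (what it takes for 200,000 megawatts to equal 200 plants). The `Scenario` and `estimate` names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Scenario:
    # Inputs taken from the post
    total_investment_usd: float = 925e9
    share_to_new_facilities: float = 0.5
    mw_per_facility: float = 400.0
    us_grid_capacity_mw: float = 1_200_000.0
    # Inferred assumptions, not stated in the post
    cost_per_facility_usd: float = 925e6  # yields 500 facilities
    mw_per_large_plant: float = 1_000.0   # yields 200 plant equivalents


def estimate(s: Scenario) -> dict:
    """Translate investment dollars into facilities, megawatts, and grid share."""
    spend = s.total_investment_usd * s.share_to_new_facilities
    facilities = spend / s.cost_per_facility_usd
    new_demand_mw = facilities * s.mw_per_facility
    return {
        "facilities": facilities,
        "new_demand_mw": new_demand_mw,
        "plant_equivalents": new_demand_mw / s.mw_per_large_plant,
        "share_of_grid_capacity": new_demand_mw / s.us_grid_capacity_mw,
    }


if __name__ == "__main__":
    base = estimate(Scenario())
    print(f"{base['facilities']:.0f} facilities, "
          f"{base['new_demand_mw']:,.0f} MW, "
          f"{base['plant_equivalents']:.0f} plants, "
          f"{base['share_of_grid_capacity']:.1%} of US capacity")
    # Sensitivity check: same spend at a hypothetical $2B per facility
    pricier = estimate(Scenario(cost_per_facility_usd=2e9))
    print(f"At $2B per facility: {pricier['share_of_grid_capacity']:.1%}")
```

The baseline comes out to about 17%, inside the 15-20% range. The cost-per-facility input moves the answer the most: if each facility costs more, the same dollars buy fewer megawatts. Comparing continuous demand with total nameplate capacity is also a simplification, since not all capacity is available at all times.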

The Questions Nobody’s Asking

While everyone’s focused on the exciting possibilities of AI advancement, I keep coming back to these fundamental infrastructure questions:

- Where will the electricity come from? Building power plants takes 5-10 years. Data centers can be built in 18-24 months.

- Can the transmission lines handle it? It’s not just about generating power – you need to move it from where it’s made to where it’s needed.

- What about redundancy? These AI systems require 99.9%+ uptime. That means backup power, redundant grid connections, and failsafe systems.

- What about the environmental impact? All this electricity has to come from somewhere. Are we trading away our climate goals for AI progress?
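To make the redundancy question concrete, an uptime target translates directly into a yearly downtime budget. A small Python sketch, assuming a 365-day (8,760-hour) year; the `downtime_hours_per_year` helper is a hypothetical name:

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 hours; ignores leap years


def downtime_hours_per_year(uptime: float) -> float:
    """Maximum hours of downtime per year allowed by an uptime fraction."""
    if not 0.0 <= uptime <= 1.0:
        raise ValueError("uptime must be a fraction between 0 and 1")
    return (1.0 - uptime) * HOURS_PER_YEAR


if __name__ == "__main__":
    for target in (0.999, 0.9999):
        hours = downtime_hours_per_year(target)
        print(f"{target:.2%} uptime -> {hours:.2f} h ({hours * 60:.0f} min) per year")
```

At 99.9%, a facility can be down for under nine hours a year in total, which is why backup power and redundant grid connections become requirements rather than extras.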

Potential Solutions on the Horizon

I don’t want to be all doom and gloom. There are some promising developments:

On-site Power Generation: Companies like Microsoft and Google are investing heavily in nuclear and renewable energy, and some projects pair new generation directly with data center sites.

Grid Modernization: Smart grid technologies could help balance loads more efficiently, though this requires massive infrastructure investment.

Efficiency Improvements: New chip designs and cooling technologies are making data centers more energy-efficient, though not fast enough to offset the growth in demand.

Strategic Location Planning: Placing data centers near existing power generation facilities or in regions with excess capacity.

The Infrastructure Investment We’re Not Talking About

Here’s what really concerns me: for every dollar being invested in data centers, we probably need 50 cents invested in electrical infrastructure. That’s potentially $400+ billion in grid improvements, new power plants, and transmission lines that I don’t see being adequately planned or funded.
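The 50-cents-on-the-dollar figure is a rough personal estimate rather than an industry benchmark. A short Python sketch (the `grid_investment_needed` helper is hypothetical, and the 0.25 and 0.75 ratios are illustrative only) applies it to the announced total and shows how the estimate scales if the true ratio is lower or higher:

```python
def grid_investment_needed(data_center_usd: float, ratio: float = 0.5) -> float:
    """Grid spending implied by a grid-dollars-per-data-center-dollar ratio."""
    return data_center_usd * ratio


if __name__ == "__main__":
    announced = 925e9  # total from the investment table
    for ratio in (0.25, 0.5, 0.75):  # 0.5 is the post's estimate; others are illustrative
        needed = grid_investment_needed(announced, ratio)
        print(f"ratio {ratio:.2f}: ${needed / 1e9:,.1f}B")
```

At the 0.5 ratio this works out to about $462 billion in grid spending.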

The private sector is moving at Silicon Valley speed, but our electrical grid moves at utility company speed – and there’s a dangerous mismatch in those timelines.

A Call for Infrastructure Realism

I’m not anti-AI or anti-progress. The potential benefits of these AI advancements could be transformative for society. But I believe we need to have an honest conversation about the infrastructure required to support this buildout.

We need coordinated planning between tech companies, utilities, and government agencies. We need to fast-track grid improvements in regions earmarked for major data center development. And we need to consider the electrical infrastructure as seriously as we consider the computing infrastructure.

The $925 billion AI investment boom represents incredible opportunity, but only if we can keep the lights on to power it. The time to address these infrastructure challenges is now, before we find ourselves with world-class AI capabilities running on an electrical grid that can’t keep pace.

What are your thoughts on this infrastructure challenge? Have you noticed strain on your local electrical grid? I’d love to hear your perspectives in the comments.

For more insights on technology infrastructure and its broader implications, follow my blog and join the conversation about building a sustainable digital future.