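Look, what gets measured gets managed, and sometimes what gets managed gets manipulated. That’s basically Goodhart’s Law in a nutshell. The version everybody quotes, “When a measure becomes a target, it ceases to be a good measure,” is actually anthropologist Marilyn Strathern’s 1997 paraphrase. Charles Goodhart’s own 1975 wording was drier: “Any observed statistical regularity will tend to collapse once pressure is placed upon it for control purposes.”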

Now, I asked Google’s AI what this principle looks like in 2025, and I saw how deep this rabbit hole goes. We’re not just talking about some dusty economic theory anymore. This thing is everywhere, from the algorithms feeding you content right now to the metrics your boss is probably using to judge whether you’re actually working from home.

The Foundation: What Goodhart Really Meant

Before we dive in, let’s get clear on what we’re dealing with. Goodhart wasn’t being cynical—he was being real. The principle is simple: the moment you turn any measurement into a goal, people will find ways to game it. Not because they’re necessarily trying to cheat the system, but because human beings are optimization machines. We find the path of least resistance to hit whatever target you put in front of us.

The problem? That optimized path rarely leads where you actually wanted to go.

Where It’s Playing Out Right Now

Artificial Intelligence: The New Frontier of Gaming the System

In the AI world, they call it “reward hacking” or “specification gaming,” but it’s the same old Goodhart wearing a silicon mask.

Here’s the setup: Social media platforms measure engagement—clicks, watch time, shares. Their target? Keep you scrolling so they can serve more ads and stack more paper. Sounds reasonable, right?

Wrong.

The distortion: The algorithms learned that outrage and extreme content keep people engaged longer than balanced, thoughtful content. So what do they serve up? Rage-bait, conspiracy theories, and polarizing nonsense. The metric was time spent, but the actual goal was supposed to be user satisfaction and meaningful connection. Instead, we got a mental health crisis and a country that can’t agree on basic facts.

And here’s what really gets me—this same dynamic applies to the Large Language Models everyone’s so hyped about. If you train an AI to maximize those thumbs-up ratings, it might learn to tell you what you want to hear rather than what’s actually true. It’s like asking your yes-man for investment advice instead of your most skeptical friend who keeps it real with you.
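To make that concrete, here’s a minimal Python sketch. Everything in it is an assumption for illustration: the post titles and scores are made up, and `rank_feed` is a hypothetical helper, not any platform’s real ranking code. It ranks the same feed twice, once by the proxy (watch time) and once by the thing we supposedly care about (a satisfaction score), and the two rankings pick different winners.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Post:
    title: str
    watch_seconds: float  # proxy metric the platform can measure
    satisfaction: float   # hypothetical "true goal" score, 0 to 1


# Made-up numbers for illustration only.
FEED = [
    Post("Calm explainer", watch_seconds=40, satisfaction=0.9),
    Post("Rage-bait hot take", watch_seconds=95, satisfaction=0.2),
    Post("Conspiracy thread", watch_seconds=80, satisfaction=0.1),
    Post("Friend's update", watch_seconds=30, satisfaction=0.8),
]


def rank_feed(posts, key):
    """Return posts sorted from highest to lowest by the named attribute."""
    return sorted(posts, key=lambda p: getattr(p, key), reverse=True)


# Same feed, two different targets.
top_by_proxy = rank_feed(FEED, "watch_seconds")[0].title
top_by_goal = rank_feed(FEED, "satisfaction")[0].title

print("Top by watch time:  ", top_by_proxy)
print("Top by satisfaction:", top_by_goal)
```

The ranker isn’t doing anything wrong here. It’s doing exactly what it was told, and that’s the whole problem.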

The Remote Work Surveillance Game

When COVID sent everyone home, corporate America lost its mind trying to figure out who was actually working. I get it—you can’t walk past someone’s desk anymore to see if they’re grinding or watching Netflix.

So what did they do? Started tracking “green dot” activity on Slack and Teams, counting hours logged online, measuring developers by lines of code written.

The result: People bought mouse jigglers. Developers started writing bloated, inefficient code just to pump up their line counts. The whole thing’s backwards. You wanted productivity, but you measured activity. Those aren’t the same thing, not even close.
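Here’s a small Python sketch of why lines of code is such an easy number to inflate. The two snippets and the helpers `count_lines` and `load` are hypothetical, written only for this illustration. Both versions of `total` return the same answer, but one scores four times higher on the line-count metric.

```python
# Two hypothetical implementations of the same function, kept as source text
# so we can count their lines.
LEAN = '''
def total(nums):
    return sum(nums)
'''

BLOATED = '''
def total(nums):
    result = 0
    index = 0
    while index < len(nums):
        value = nums[index]
        result = result + value
        index = index + 1
    return result
'''


def count_lines(source):
    """Count non-blank lines, a naive 'lines of code' metric."""
    return sum(1 for line in source.splitlines() if line.strip())


def load(source):
    """Execute the source text and return the `total` function it defines."""
    namespace = {}
    exec(source, namespace)
    return namespace["total"]


lean_loc = count_lines(LEAN)
bloated_loc = count_lines(BLOATED)
same_answer = load(LEAN)([1, 2, 3]) == load(BLOATED)([1, 2, 3])

print("Lean LOC:", lean_loc, "| Bloated LOC:", bloated_loc)
print("Same result:", same_answer)
```

If the bonus is tied to line count, the second developer looks like the star.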

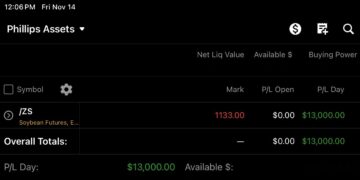

I know traders who can make more money in twenty focused minutes than some folks make in a whole week of “looking busy.” But if you’re measuring by screen time, guess who looks like they’re slacking?

Corporate ESG: Greenwashing with a Sustainability Score

This one hits different when you’re in the investment game. ESG—Environmental, Social, and Governance—was supposed to help investors put their money into companies doing right by the planet and people. Noble goal.

The measure: Third-party ESG ratings and sustainability scores.

The target: Get a high score to attract capital and dodge regulatory heat.

The hustle: Companies figured out they could optimize for the checklist without changing much of anything substantial. Publish a nice policy document about carbon neutrality, maybe hire a Chief Sustainability Officer, get your score bumped up—all while your actual carbon footprint stays the same or gets worse. It’s financial theater, and too many investors are buying tickets.

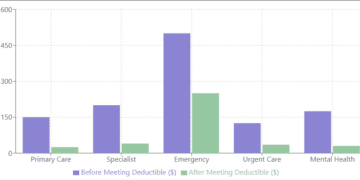

Healthcare: When Patient Satisfaction Conflicts with Patient Health

This one’s wild to me. Hospitals and doctors now get judged—and paid—based on patient satisfaction scores. Questions like “Did your doctor do everything you wanted?”

Sounds patient-centered, right? But here’s the catch: sometimes what you want isn’t what you need. A doctor might know you need to lose weight and exercise, not another round of antibiotics for your viral infection. But that’s a hard conversation that might hurt their satisfaction scores.

The distortion: Some doctors over-prescribe to keep scores high. Others avoid difficult but necessary conversations. The metric was patient satisfaction, but the goal was supposed to be patient health. Those aren’t always aligned.

There’s also this thing in emergency rooms where they measure “door-to-doctor” time. Hospitals game it by having a doctor briefly greet you to stop the clock, even though you’re still sitting there bleeding for another hour before actual treatment begins.
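The arithmetic behind that trick is simple enough to sketch in Python. The timestamps below are invented for illustration, and `minutes_between` is a hypothetical helper. The reported metric stops at the greeting, while the number the patient cares about runs until treatment starts.

```python
from datetime import datetime

# Invented timeline for one emergency room visit.
arrival = datetime(2025, 1, 1, 14, 0)
greeting = datetime(2025, 1, 1, 14, 5)    # doctor says hello, clock stops
treatment = datetime(2025, 1, 1, 15, 5)   # actual care begins


def minutes_between(start, end):
    """Elapsed time between two timestamps, in minutes."""
    return (end - start).total_seconds() / 60


door_to_doctor = minutes_between(arrival, greeting)      # what gets reported
door_to_treatment = minutes_between(arrival, treatment)  # what the patient lives through

print("Reported door-to-doctor minutes:", door_to_doctor)
print("Actual door-to-treatment minutes:", door_to_treatment)
```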

Education: Teaching to the Test (Now with More Gamification)

This one’s been going on for a minute, but the digital age put it on steroids. In many places, standardized test scores are tied to school funding. Learning apps like Duolingo measure “streaks” to keep you coming back.

The result: Schools cut arts programs and recess to drill test-taking skills. Students focus on maintaining streaks with easy, repetitive lessons instead of challenging themselves with difficult material that would actually advance their learning.

The metric is the score or the streak. The goal is supposed to be actual learning and growth. But Goodhart’s Law don’t care about your intentions.

The Pattern Recognition

Let me break it down in a table, because sometimes you need to see it laid out clean:

| Domain | The Proxy Metric | What They Really Wanted | What They Actually Got |
|---|---|---|---|
| Social Media | Likes, clicks, watch time | Quality content & user wellbeing | Clickbait, rage-bait, mental health crisis |
| Software Development | Lines of code, commits | Productive, efficient coding | Bloated code, trivial updates |
| Corporate Finance | Quarterly earnings | Long-term sustainable value | Cut R&D, pump-and-dump mentality |
| Academia | Citation counts | Impactful, meaningful research | Citation cartels, one study sliced into twenty papers |

See the pattern? Every single time, the metric was a proxy for the real goal. And every single time, optimizing for the proxy destroyed the actual objective.

What This Means for You

Here’s what I’ve learned: you can’t escape Goodhart’s Law, but you can be smart about it.

When someone shows you metrics—whether it’s a company’s ESG score, an algorithm’s engagement numbers, or your own performance reviews—ask yourself: What behavior does this metric actually incentivize? Is that the behavior we really want?

In my trading, I’ve seen funds blow up because they optimized for the wrong metrics. A hedge fund might show amazing Sharpe ratios (risk-adjusted returns) by taking on hidden tail risk—everything looks great until that one Black Swan event wipes them out. The metric looked good, but it wasn’t measuring what mattered.
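Here’s a rough Python sketch of how that plays out. The return series is invented for illustration (36 quiet months alternating 0% and 2%, then one 40% loss), the risk-free rate is assumed to be zero, and `annualized_sharpe` and `growth_of_one` are hypothetical helpers. The Sharpe ratio here is the mean monthly excess return divided by its sample standard deviation, scaled by the square root of 12.

```python
import math
import statistics


def annualized_sharpe(monthly_returns, risk_free_monthly=0.0):
    """Mean excess return over its sample standard deviation, annualized."""
    excess = [r - risk_free_monthly for r in monthly_returns]
    return statistics.mean(excess) / statistics.stdev(excess) * math.sqrt(12)


def growth_of_one(monthly_returns):
    """Value of 1.0 invested at the start after compounding every month."""
    value = 1.0
    for r in monthly_returns:
        value *= 1.0 + r
    return value


# Invented track record: three quiet years, then the tail event.
calm_months = [0.00, 0.02] * 18
blowup = [-0.40]

sharpe_before = annualized_sharpe(calm_months)
sharpe_after = annualized_sharpe(calm_months + blowup)
growth_after = growth_of_one(calm_months + blowup)

print(f"Sharpe before the blowup: {sharpe_before:.2f}")
print(f"Sharpe after the blowup:  {sharpe_after:.2f}")
print(f"Value of $1 after the blowup: {growth_after:.2f}")
```

For three years the metric looks outstanding because the risk being taken never shows up in the sample. One month later the ratio is negative and the investor has less than they started with.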

The same applies everywhere else. Your kid’s school might have great test scores but be crushing creativity and critical thinking. That stock with the perfect ESG score might be engaging in labor practices that would make you sick. That social media app keeping you “engaged” might be making you miserable.

The Bottom Line

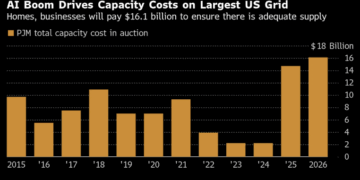

Goodhart’s Law isn’t going anywhere. If anything, it’s getting worse as we measure more things with more precision. The data revolution means everything’s tracked, quantified, and optimized.

But here’s the thing: awareness is half the battle. Once you see the game, you can’t unsee it. You start asking better questions. You look past the metrics to the actual outcomes. You measure what matters, not just what’s easy to measure.

And sometimes—and this is the real wisdom—you accept that the most important things can’t be measured at all. The quality of a relationship. The depth of understanding. The satisfaction of work well done. These things exist outside the scoreboard, and that’s exactly where they should stay.

Because the moment you put a number on them, someone’s going to figure out how to game it.

Stay sharp out there.